Changelog History


v1.3.4 Changes

March 26, 2018

The v1.3.4 release of Zstandard is focused on performance, and offers a nice speed boost in most scenarios.

Asynchronous compression by default for `zstd` CLI

`zstd` cli will now perform compression in parallel with I/O operations by default. This requires multi-threading capability (which is also enabled by default).
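To make the idea of overlapping compression with I/O concrete, here is a minimal Python sketch. It is not how the `zstd` CLI is implemented: it uses the standard library's `zlib` as a stand-in compressor, and the function name `compress_overlapped`, the chunk size, and the queue depth are all assumptions made for illustration. One thread keeps reading input while the main thread compresses the chunks already read.

```python
import io
import queue
import threading
import zlib

CHUNK_SIZE = 64 * 1024  # arbitrary chunk size chosen for this sketch


def compress_overlapped(src, dst, chunk_size=CHUNK_SIZE):
    """Compress src into dst while a second thread keeps reading ahead."""
    chunks = queue.Queue(maxsize=4)  # bounded, so the reader cannot run far ahead

    def reader():
        # I/O side: read chunks and hand them over to the compressing thread.
        while True:
            chunk = src.read(chunk_size)
            chunks.put(chunk)
            if not chunk:  # an empty read marks end of input
                break

    io_thread = threading.Thread(target=reader)
    io_thread.start()

    compressor = zlib.compressobj(1)  # zlib is only a stand-in for zstd here
    while True:
        chunk = chunks.get()
        if not chunk:
            break
        dst.write(compressor.compress(chunk))
    dst.write(compressor.flush())
    io_thread.join()


if __name__ == "__main__":
    payload = b"zstd overlaps compression with I/O. " * 50_000
    source, sink = io.BytesIO(payload), io.BytesIO()
    compress_overlapped(source, sink)
    assert zlib.decompress(sink.getvalue()) == payload
    print(len(payload), "->", len(sink.getvalue()), "bytes")
```

With in-memory buffers the overlap brings little benefit; the pattern matters when `src.read` actually waits on a disk or a network socket.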

It doesn't sound like much, but it effectively improves throughput by 20-30%, depending on compression level and underlying I/O performance.

For example, on a Mac OS-X laptop with an Intel Core i7-5557U CPU @ 3.10GHz, running `time zstd enwik9` at default compression level (2) on an SSD gives the following:

| Version | real time |
| --- | --- |
| 1.3.3 | 9.2s |
| 1.3.4 --single-thread | 8.8s |
| 1.3.4 (asynchronous) | 7.5s |

This is a nice boost to all scripts using `zstd` cli, typically in network or storage tasks. The effect is even more pronounced at faster compression settings, since the CLI overlaps a proportionally higher share of compression with I/O.

The previous default behavior (blocking single thread) is still available through the `--single-thread` long command. It's also the only mode available when no multi-threading capability is detected.

General speed improvements

Some core routines have been refined to provide more speed on newer cpus, making better use of their out-of-order execution units. This is more noticeable on the decompression side, and even more so with the `gcc` compiler.

Example on the same platform, running in-memory benchmark `zstd -b1 silesia.tar`:

| Version | C.Speed | D.Speed |
| --- | --- | --- |
| 1.3.3 llvm9 | 290 MB/s | 660 MB/s |
| 1.3.4 llvm9 | 304 MB/s | 700 MB/s (+6%) |
| 1.3.3 gcc7 | 280 MB/s | 710 MB/s |
| 1.3.4 gcc7 | 300 MB/s | 890 MB/s (+25%) |

Faster compression levels

So far, compression level 1 has been the fastest one available. Starting with v1.3.4, there will be additional choices. Faster compression levels can be invoked using negative values.

On the command line, the equivalent can be triggered using the `--fast[=#]` command.

Negative compression levels sample data more sparsely, and disable Huffman compression of literals, translating into faster decoding speed.

It's possible to create one's own custom fast compression level by using strategy `ZSTD_fast`, increasing `ZSTD_p_targetLength` to the desired value, and turning literals compression on or off using `ZSTD_p_compressLiterals`.

Performance is generally on par with or better than other high speed algorithms. In the benchmark below (compressing `silesia.tar` on an Intel Core i7-6700K CPU @ 4.00GHz), it ends up being faster and stronger on all metrics compared with `quicklz` and `snappy` at `--fast=2`. It also compares favorably to `lzo` with `--fast=3`. `lz4` still offers a better speed / compression combo, with `zstd --fast=4` coming close.

| name | ratio | compression | decompression |
| --- | --- | --- | --- |
| zstd 1.3.4 --fast=5 | 1.996 | 770 MB/s | 2060 MB/s |
| lz4 1.8.1 | 2.101 | 750 MB/s | 3700 MB/s |
| zstd 1.3.4 --fast=4 | 2.068 | 720 MB/s | 2000 MB/s |
| zstd 1.3.4 --fast=3 | 2.153 | 675 MB/s | 1930 MB/s |
| lzo1x 2.09 -1 | 2.108 | 640 MB/s | 810 MB/s |
| zstd 1.3.4 --fast=2 | 2.265 | 610 MB/s | 1830 MB/s |
| quicklz 1.5.0 -1 | 2.238 | 540 MB/s | 720 MB/s |
| snappy 1.1.4 | 2.091 | 530 MB/s | 1820 MB/s |
| zstd 1.3.4 --fast=1 | 2.431 | 530 MB/s | 1770 MB/s |
| zstd 1.3.4 -1 | 2.877 | 470 MB/s | 1380 MB/s |
| brotli 1.0.2 -0 | 2.701 | 410 MB/s | 430 MB/s |
| lzf 3.6 -1 | 2.077 | 400 MB/s | 860 MB/s |
| zlib 1.2.11 -1 | 2.743 | 110 MB/s | 400 MB/s |

Applications which were considering Zstandard but were worried about being CPU-bound are now able to shift the load from CPU to bandwidth on a larger scale, and may even temporarily vary their choice depending on local conditions (to deal with some sudden workload surge for example).
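As a rough illustration of shifting load between CPU and bandwidth, the sketch below picks a level for a given link speed using the zstd rows of the benchmark table. The model is an assumption made for this example, not something the release notes prescribe: compression and transmission are treated as a pipeline, so the rate at which original data moves is the smaller of the compression speed and the link bandwidth multiplied by the ratio. The helper names (`cli_flag`, `effective_throughput`, `best_level`) are hypothetical.

```python
# (level, ratio, compression speed in MB/s) for zstd 1.3.4, copied from the table.
# Negative levels correspond to --fast=# on the command line.
ZSTD_ROWS = [
    (-5, 1.996, 770),
    (-4, 2.068, 720),
    (-3, 2.153, 675),
    (-2, 2.265, 610),
    (-1, 2.431, 530),
    (1, 2.877, 470),
]


def cli_flag(level):
    """Command-line spelling of a level: negative levels use --fast=#."""
    return f"--fast={-level}" if level < 0 else f"-{level}"


def effective_throughput(ratio, speed_mb_s, link_mb_s):
    """Original-data rate of a compress-then-send pipeline (simplified model)."""
    return min(speed_mb_s, link_mb_s * ratio)


def best_level(link_mb_s):
    """Level from the table that moves the most original data per second."""
    best = max(
        ZSTD_ROWS,
        key=lambda row: effective_throughput(row[1], row[2], link_mb_s),
    )
    return best[0]


if __name__ == "__main__":
    for link in (100, 250, 400):  # hypothetical link speeds in MB/s
        level = best_level(link)
        print(f"{link} MB/s link: level {level} ({cli_flag(level)})")
```

Under this model a slow link favors the stronger levels, since the link is the bottleneck, while a fast link favors the fastest levels. The figures only hold for this file and this CPU.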

Long Range Mode with Multi-threading

zstd-1.3.2 introduced the long range mode, capable of deduplicating long distance redundancies in a large data stream, a situation typical in backup scenarios for example. But its usage in association with multi-threading was discouraged, due to inefficient use of memory.

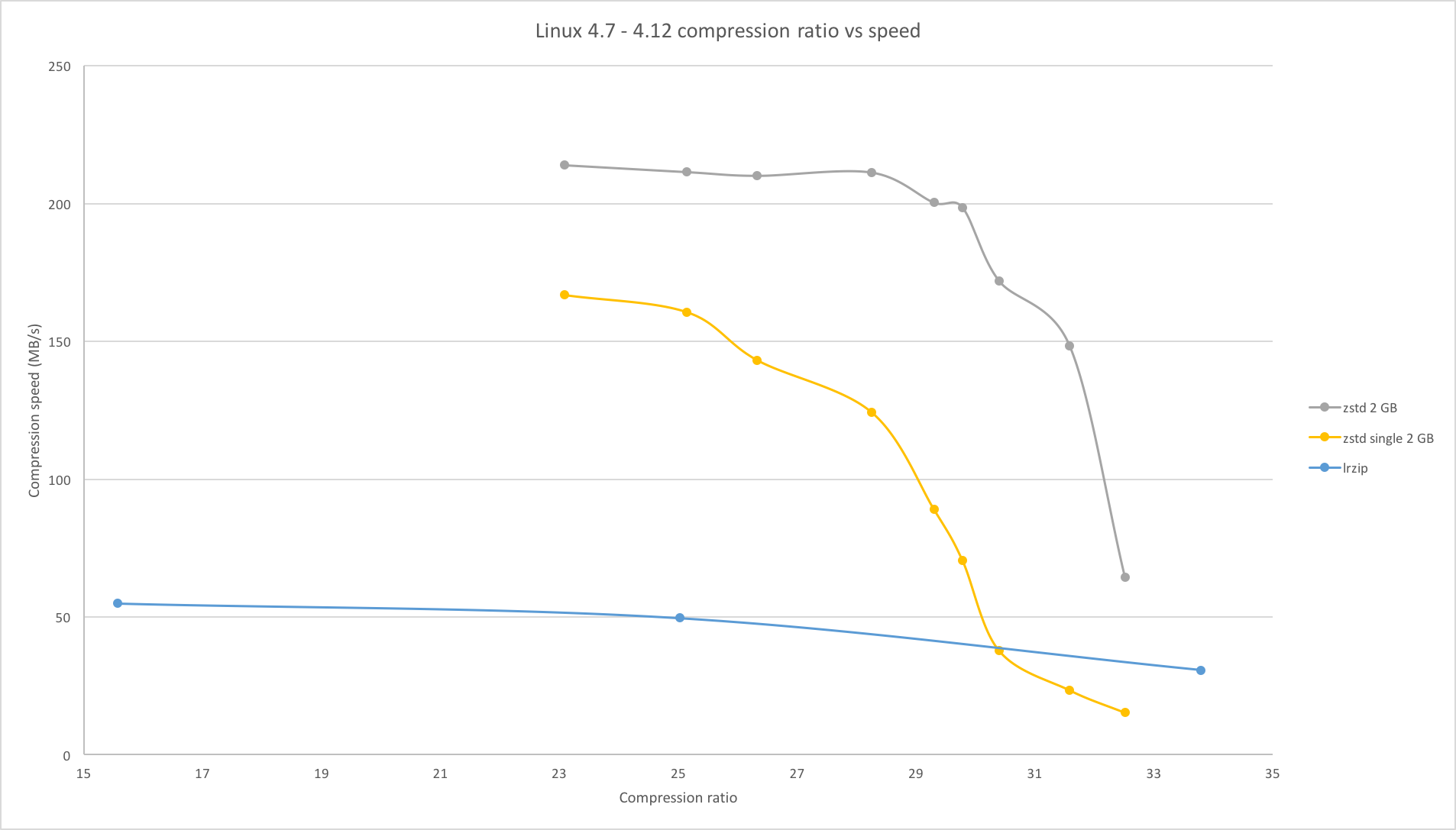

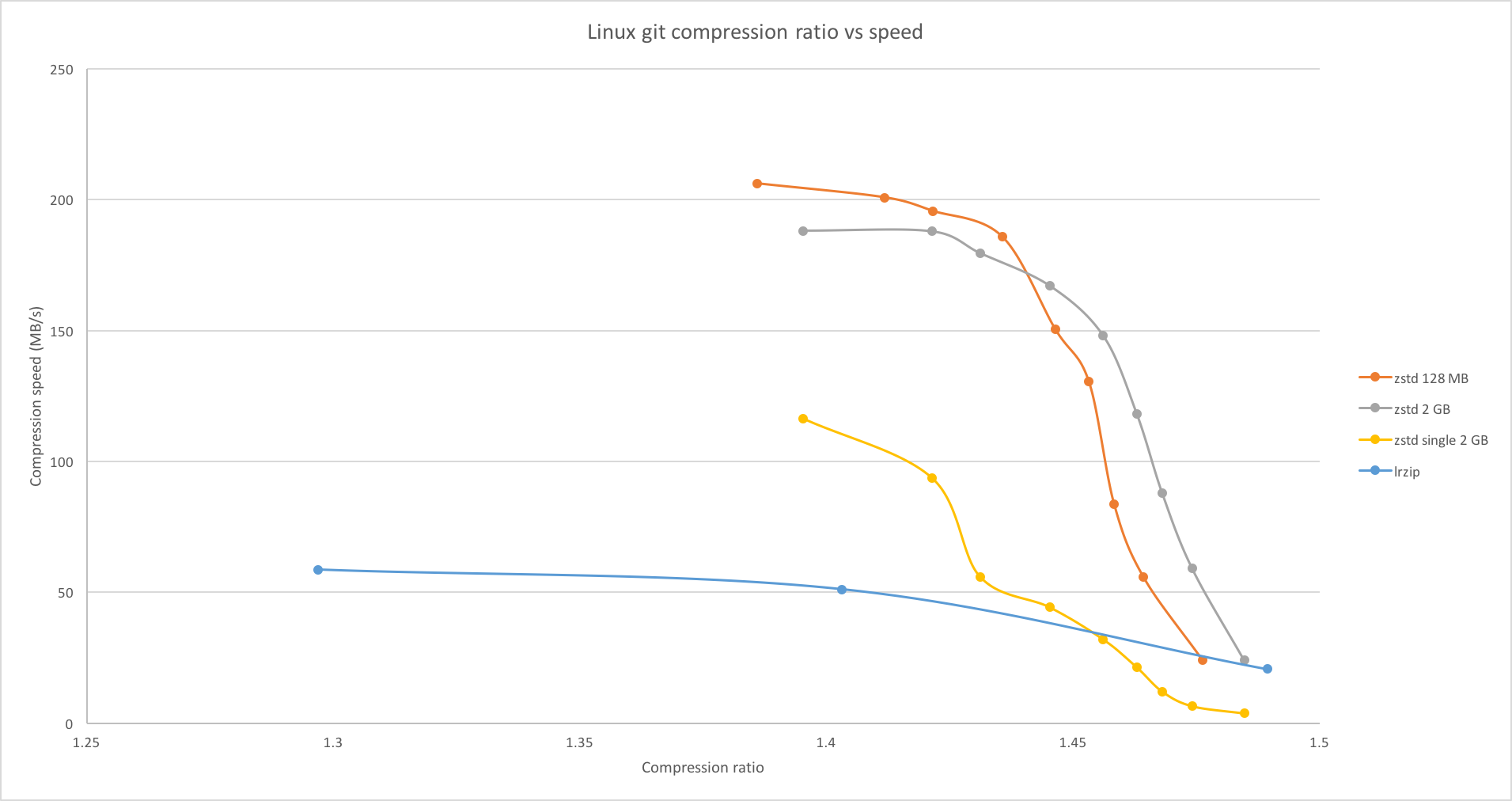

zstd-1.3.4 solves this issue by making the long range match finder run in serial mode, like a pre-processor, before passing its result to backend compressors (regular zstd). Memory usage is now bounded to the maximum of the long range window size, and the memory that zstdmt would require without long range matching. As the long range mode runs at about 200 MB/s, depending on the number of cores available, it's possible to tune compression level to match the LRM speed, which becomes the upper limit.

`zstd -T0 -5 --long file # autodetect threads, level 5, 128 MB window`

`zstd -T16 -10 --long=31 file # 16 threads, level 10, 2 GB window`

As illustration, benchmarks of the two files "Linux 4.7 - 4.12" and "Linux git" from the 1.3.2 release are shown in the accompanying charts. All compressors are run with 16 threads, except "zstd single 2 GB". `zstd` compressors are run with either a 128 MB or 2 GB window size, and the `lrzip` compressor is run with `lzo`, `gzip`, and `xz` backends. The benchmarks were run on a 16 core Sandy Bridge @ 2.2 GHz.

The association of Long Range Mode with multi-threading is pretty compelling for large stream scenarios.
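The memory bound and the speed ceiling described above lend themselves to a little arithmetic. The sketch below is only a back-of-the-envelope aid: the function names are hypothetical, the 400 MiB zstdmt figure and the 45 MB/s per-worker speed are made-up inputs, and linear scaling across workers is a simplifying assumption.

```python
import math

LRM_SPEED_MB_S = 200  # approximate long range mode speed quoted above


def window_bytes(window_log):
    """Window size in bytes for a given window log (the value passed to --long=#)."""
    return 1 << window_log


def memory_bound(window_log, zstdmt_bytes):
    """Upper bound described above: the larger of the window and zstdmt's own need."""
    return max(window_bytes(window_log), zstdmt_bytes)


def workers_to_match_lrm(per_worker_mb_s):
    """Workers needed before the serial long range stage becomes the limit.

    Assumes backend speed scales linearly with workers, which is a simplification.
    """
    return math.ceil(LRM_SPEED_MB_S / per_worker_mb_s)


if __name__ == "__main__":
    MIB = 1 << 20
    hypothetical_zstdmt = 400 * MIB  # made-up figure, not a measured value
    for window_log in (27, 31):  # 2**27 bytes is the 128 MB window, 2**31 the 2 GB one
        bound = memory_bound(window_log, hypothetical_zstdmt)
        print(f"windowLog={window_log}: window={window_bytes(window_log) // MIB} MiB, "
              f"bound={bound // MIB} MiB")
    print("workers at a hypothetical 45 MB/s each:", workers_to_match_lrm(45))
```

Adding workers beyond the returned count should not help in this model, because the serial long range stage caps throughput at roughly 200 MB/s.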

Miscellaneous

This release also brings its usual list of small improvements and bug fixes, as detailed below:

- perf: faster speed (especially decoding speed) on recent cpus (haswell+)

- perf: much better performance associating `--long` with multi-threading, by @terrelln

- perf: better compression at levels 13-15

- cli : asynchronous compression by default, for faster experience (use `--single-thread` for former behavior)

- cli : smoother status report in multi-threading mode

- cli : added command `--fast=#`, for faster compression modes

- cli : fix crash when not overwriting existing files, by Pádraig Brady (@pixelb)

- api : `nbThreads` becomes `nbWorkers` : 1 triggers asynchronous mode

- api : compression levels can be negative, for even more speed

- api : `ZSTD_getFrameProgression()` : get precise progress status of ZSTDMT anytime

- api : ZSTDMT can accept new compression parameters during compression

- api : implemented all advanced dictionary decompression prototypes

- build: improved meson recipe, by Shawn Landden (@shawnl)

- build: VS2017 scripts, by @HaydnTrigg

- misc: all `/contrib` projects fixed

- misc: added `/contrib/docker` script by @gyscos


v1.3.3 Changes

December 21, 2017

This is a bugfix release, mostly focused on cleaning up several detrimental corner case scenarios. It is nonetheless a recommended upgrade.

Changes Summary

- perf: improved `zstd_opt` strategy (levels 16-19)

- fix : bug #944 : multithreading with shared dictionary and large data, reported by @gsliepen

- cli : change : `-o` can be combined with multiple inputs, by @terrelln

- cli : fix : content size written in header by default

- cli : fix : improved LZ4 format support, by @felixhandte

- cli : new : hidden command `-b -S`, to benchmark multiple files and generate one result per file

- api : change : when setting `pledgedSrcSize`, use `ZSTD_CONTENTSIZE_UNKNOWN` macro value to mean "unknown"

- api : fix : support large skippable frames, by @terrelln

- api : fix : re-using context could result in suboptimal block size in some corner case scenarios

- api : fix : streaming interface was adding a useless 3-bytes null block to small frames

- build: fix : compilation under rhel6 and centos6, reported by @pixelb

- build: added `check` target

- build: improved meson support, by @shawnl

- perf: improved