LZ4 v1.8.2 Release Notes

Release Date: 2018-05-07

LZ4 v1.8.2 is a performance-focused release, featuring important improvements for small inputs, especially when coupled with dictionary compression.

General speed improvements

LZ4 decompression speed has always been a strong point. v1.8.2 improves it further, by about 10%, thanks in large part to suggestions from @svpv.

For example, on a Mac OS-X laptop with an Intel Core i7-5557U CPU @ 3.10GHz, running `lz4 -bsilesia.tar` compiled with default compiler `llvm v9.1.0`:

| Version | v1.8.1 | v1.8.2 | Improvement |
| --- | --- | --- | --- |
| Decompression speed | 2490 MB/s | 2770 MB/s | +11% |

Compression speeds also receive a welcome boost, though the improvement is not evenly distributed, with higher levels benefiting quite a lot more.

| Version | v1.8.1 | v1.8.2 | Improvement |
| --- | --- | --- | --- |
| lz4 -1 | 504 MB/s | 516 MB/s | +2% |
| lz4 -9 | 23.2 MB/s | 25.6 MB/s | +10% |
| lz4 -12 | 3.5 MB/s | 9.5 MB/s | +170% |

Should you aim for the best possible decompression speed, you can ask LZ4 to actively favor decompression speed, even if it means sacrificing some compression ratio in the process. This can be requested in a variety of ways depending on the interface, such as using the command `--favor-decSpeed` on the CLI. This option must be combined with ultra compression mode (levels 10+), as it needs careful weighting of multiple solutions, which only this mode can process.

The resulting compressed object always decompresses faster, but is also larger. Your mileage will vary, depending on file content. Speed improvement can be as low as 1%, and as high as 40%. It's matched by a corresponding file size increase, which tends to be proportional. The general expectation is 10-20% faster decompression speed for 1-2% bigger files.

| Filename | decompression speed | `--favor-decSpeed` | Speed Improvement | Size change |
| --- | --- | --- | --- | --- |
| silesia.tar | 2870 MB/s | 3070 MB/s | +7 % | +1.45% |
| dickens | 2390 MB/s | 2450 MB/s | +2 % | +0.21% |
| nci | 3740 MB/s | 4250 MB/s | +13 % | +1.93% |
| osdb | 3140 MB/s | 4020 MB/s | +28 % | +4.04% |
| xml | 3770 MB/s | 4380 MB/s | +16 % | +2.74% |

Finally, variant `LZ4_compress_destSize()` also receives a ~10% speed boost, since it now internally redirects toward the primary internal implementation of LZ4 fast mode, rather than relying on a separate custom implementation. This allows it to take advantage of all the optimization work that has gone into the main implementation.

Compressing small contents

When compressing small inputs, the fixed cost of clearing the compression's internal data structures can become a significant fraction of the compression cost. This release adds a new way, under certain conditions, to perform this initialization at effectively zero cost.
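These notes do not describe the mechanism itself. As a purely illustrative toy, and not LZ4's actual code, the sketch below shows one well-known way to make a table reset cheap: instead of wiping every slot, a base value is advanced so that all older entries read as stale. Every name here (`toy_table`, `toy_fast_reset`, and so on) is hypothetical, and inputs are assumed to be shorter than 1 GiB.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define TABLE_SIZE 4096u

typedef struct {
    uint32_t slot[TABLE_SIZE];  /* stored value = base + position + 1 */
    uint32_t base;              /* any stored value <= base is stale */
} toy_table;

/* Full clear: touches every slot, so its cost is fixed by TABLE_SIZE. */
static void toy_init(toy_table* t)
{
    memset(t, 0, sizeof(*t));
}

/* Fast reset: constant cost, no slot is touched in the common case.
 * prev_len is the length of the input processed since the last reset. */
static void toy_fast_reset(toy_table* t, uint32_t prev_len)
{
    assert(prev_len < (1u << 30));
    if (t->base > UINT32_MAX / 4) {  /* rare: full clear before values could wrap */
        toy_init(t);
        return;
    }
    t->base += prev_len;             /* every previously stored value is now <= base */
}

static void toy_put(toy_table* t, uint32_t hash, uint32_t pos)
{
    t->slot[hash % TABLE_SIZE] = t->base + pos + 1;
}

/* Returns 1 and writes *pos if the slot holds an entry from the current input. */
static int toy_get(const toy_table* t, uint32_t hash, uint32_t* pos)
{
    uint32_t const v = t->slot[hash % TABLE_SIZE];
    if (v <= t->base) return 0;      /* empty, or left over from an earlier input */
    *pos = v - t->base - 1;
    return 1;
}
```

With this layout the reset cost no longer depends on the table size, which is the property that matters when inputs are only a few hundred bytes long.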

New, experimental LZ4 APIs have been introduced to take advantage of this functionality in block mode:

- `LZ4_resetStream_fast()`

- `LZ4_compress_fast_extState_fastReset()`

- `LZ4_resetStreamHC_fast()`

- `LZ4_compress_HC_extStateHC_fastReset()`

More detail about how and when to use these functions is provided in their respective headers.

LZ4 Frame mode has been modified to use this faster reset whenever possible.

`LZ4F_compressFrame_usingCDict()` prototype has been modified to additionally take an `LZ4F_CCtx*` context, so it can use this speed-up.

Efficient Dictionary compression

Support for dictionaries has been improved in a similar way: they can now be used in-place, which avoids the expense of copying the context state from the dictionary into the working context. Users can expect to see a noticeable performance improvement for small data.

Experimental prototypes (`LZ4_attach_dictionary()` and `LZ4_attach_HC_dictionary()`) have been added to the LZ4 block API to use a loaded dictionary in-place. LZ4 Frame API users should benefit from this optimization transparently.

The previous two changes, when taken advantage of, can provide meaningful performance improvements when compressing small data. Both changes have no impact on the produced compressed data. The only observable difference is speed.

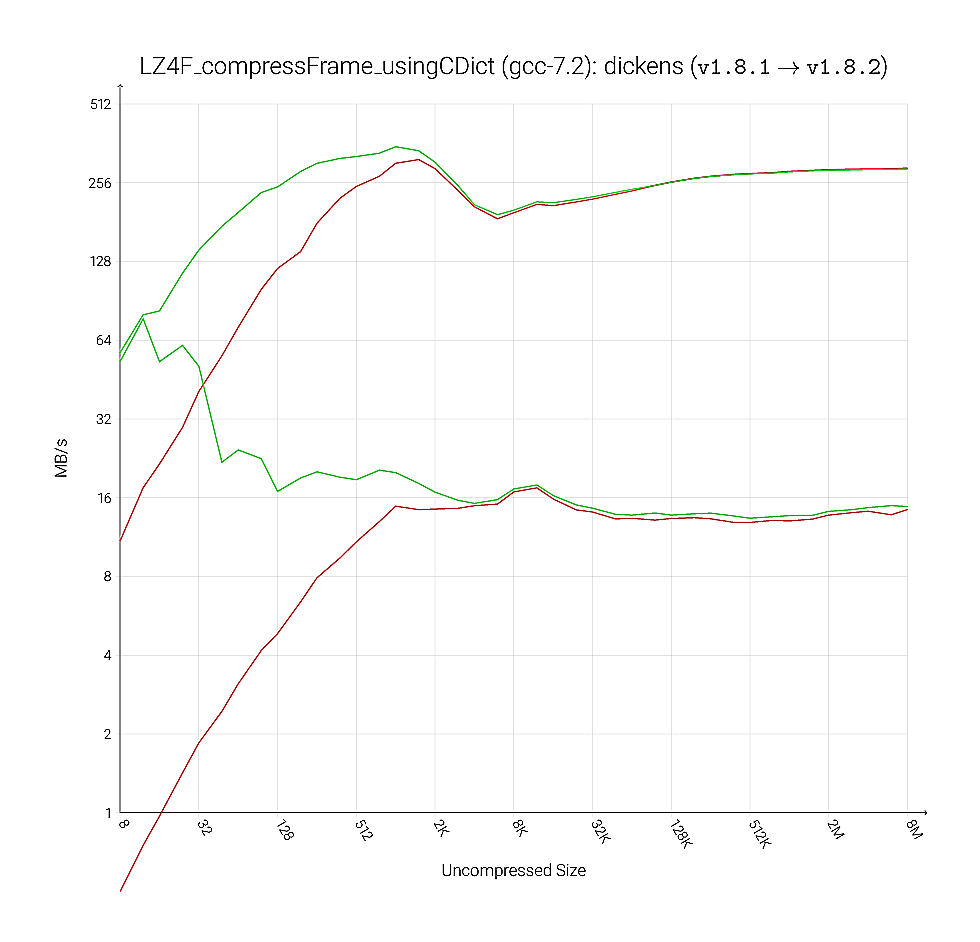

This is a representative graphic of the sort of speed boost to expect. The red lines are the speeds seen for an input blob of the specified size, using the previous LZ4 release (v1.8.1) at compression levels 1 and 9 (that is, fast mode and the default HC level). The green lines are the equivalent observations for v1.8.2. This benchmark was performed on the Silesia Corpus. Results for the `dickens` text are shown; other texts and compression levels saw similar improvements. The benchmark was compiled with GCC 7.2.0 with `-O3 -march=native -mtune=native -DNDEBUG` under Linux 4.6 and run on an `Intel Xeon CPU E5-2680 v4 @ 2.40GHz`.

`lz4frame_static.h` Deprecation

The content of `lz4frame_static.h` has been folded into `lz4frame.h`, hidden by a macro guard "`#ifdef LZ4F_STATIC_LINKING_ONLY`". This means `lz4frame.h` now matches `lz4.h` and `lz4hc.h`. `lz4frame_static.h` is retained as a shell that simply sets the guard macro and includes `lz4frame.h`.

Changes list

This release also brings an assortment of small improvements and bug fixes, as detailed below:

- perf: faster compression on small files, by @felixhandte

- perf: improved decompression speed and binary size, by Alexey Tourbin (@svpv)

- perf: faster HC compression, especially at max level

- perf: very small compression ratio improvement

- fix : compression compatible with low memory addresses (`< 0xFFFF`)

- fix : decompression segfault when provided with `NULL` input, by @terrelln

- cli : new command `--favor-decSpeed`

- cli : benchmark mode more accurate for small inputs

- fullbench : can bench `_destSize()` variants, by @felixhandte

- doc : clarified block format parsing restrictions, by Alexey Tourbin (@svpv)